What is Regression?

We use this term very often in Machine Learning and Statistics.

What is the meaning of this term?

It is a method used in statistics to determine the relationship between one variable (the dependent variable) and one or more other variables (the independent variables).

The literal meaning of regression is stepping back towards the average.

So, where did the term regression in predictive models come from?

Just out of curiosity!

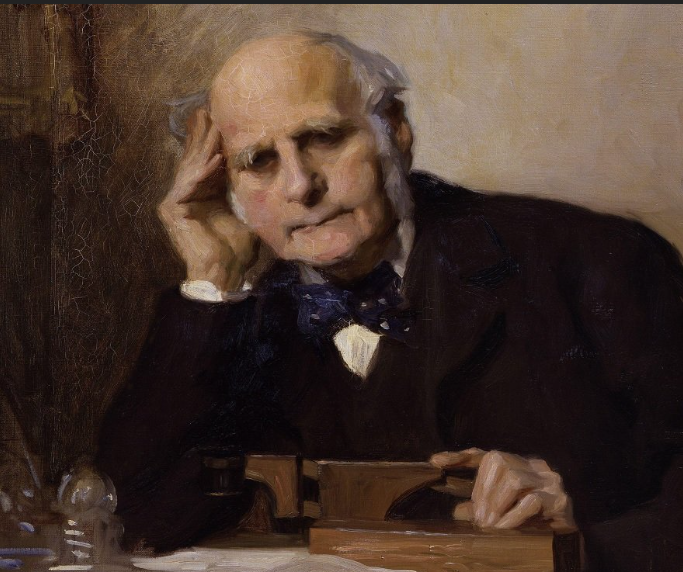

Sir Francis Galton is credited with the idea of statistical regression.

Galton reported his discovery that the heights of sons, compared with those of their fathers, regressed towards mediocrity, that is, towards the average height.

Though most applications of regression analysis have little to do with regression to the mean, we still continue to use the term regression.

Simple Linear Regression:

We model a relationship between two variables.

Dependent, y

Independent, x

Since there is only one independent variable, we call it simple linear regression.
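To make the two-variable setup concrete, here is a minimal sketch of fitting a simple linear regression by ordinary least squares, assuming NumPy is available. The function name `fit_simple_linear_regression` and the toy data are hypothetical. The slope is the covariance of x and y divided by the variance of x, and the intercept makes the line pass through the point of means.

```python
import numpy as np

def fit_simple_linear_regression(x, y):
    """Ordinary least squares estimates for y = m*x + c (hypothetical helper)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x_mean, y_mean = x.mean(), y.mean()
    # Slope: sum of cross-deviations over sum of squared x-deviations,
    # i.e. covariance(x, y) / variance(x)
    m = np.sum((x - x_mean) * (y - y_mean)) / np.sum((x - x_mean) ** 2)
    # Intercept: the fitted line passes through the point of means
    c = y_mean - m * x_mean
    return m, c

# Hypothetical toy data lying exactly on y = 2x + 1
x = [0, 1, 2, 3, 4]
y = [1, 3, 5, 7, 9]
m, c = fit_simple_linear_regression(x, y)
print(m, c)  # 2.0 1.0
```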

What is a statistical model?

A statistical model is a set of mathematical formulas and assumptions that describe a real-world situation.

We would like our model to capture as much of the real-world situation as possible. However, there is always uncertainty, so some error will always remain.

These errors are seen as being caused by unknown outside factors that affect the process generating our data.

A good statistical model is parsimonious, meaning it uses as few mathematical terms as possible.

So, it can be understood like this: the statistical model breaks down the real-world situation into systematic and non-systematic components. The systematically occurring components are explained by the math, while the non-systematic changes are captured in the error.
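As an illustration of this split, the sketch below assumes NumPy and a made-up data-generating process (a systematic part 2x + 1 plus normal noise with standard deviation 1.5). It fits a straight line with `np.polyfit` and separates each observation into the fitted, systematic part and the leftover error. The numbers are hypothetical, not taken from any real data set.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data-generating process: a systematic part (2x + 1)
# plus random, non-systematic noise with standard deviation 1.5
x = np.linspace(0, 10, 200)
noise = rng.normal(loc=0.0, scale=1.5, size=x.size)
y = (2 * x + 1) + noise

# Fit a straight line; np.polyfit returns coefficients, highest power first
slope, intercept = np.polyfit(x, y, deg=1)

systematic = slope * x + intercept   # the part explained by the math
error = y - systematic               # the part left over (the residuals)

# Every observation splits exactly into the two components
assert np.allclose(systematic + error, y)
print(round(slope, 2), round(intercept, 2), round(error.std(), 2))
```

The estimated slope and intercept land near the hypothetical 2 and 1, and the spread of the leftover error lands near the noise level that was put in.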

Assumptions:

A parametric model makes assumptions about data for the purpose of analysis. Linear Regression is a parametric approach.

It can give unreliable results if the assumptions are not fulfilled. Hence, it is important to validate these assumptions for the data set.

Linearity:

The relationship between X and Y should be linear.

How do we check whether there is a linear relationship in a data set?

A simple scatter plot gives an idea. Pearson’s correlation coefficient captures the strength of the linear relationship in a single number.
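A quick way to see this, assuming NumPy: `np.corrcoef` returns a correlation matrix whose off-diagonal entry is Pearson's r. The two data sets below are hypothetical. The curved one has a strong but non-linear relationship, and its r comes out essentially zero, which is why the scatter plot is worth looking at alongside the number: r measures only linear association.

```python
import numpy as np

rng = np.random.default_rng(1)
x = np.linspace(-3, 3, 101)

# Hypothetical data: one roughly linear relationship, one curved one
y_linear = 3 * x + rng.normal(scale=1.0, size=x.size)
y_curved = x ** 2  # a strong relationship, but not a linear one

# np.corrcoef returns a 2x2 correlation matrix; entry [0, 1] is Pearson's r
r_linear = np.corrcoef(x, y_linear)[0, 1]
r_curved = np.corrcoef(x, y_curved)[0, 1]
print(f"linear: r = {r_linear:.3f}, curved: r = {r_curved:.3f}")
```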

What happens if the linearity assumption is violated?

If a linear regression model is fit to a non-linearly related data set, the model will not be able to capture the non-linear relationship. Hence, its predictions will be poor, and on an unseen data set it will make erroneous predictions.
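A small sketch of what goes wrong, assuming NumPy and hypothetical noise-free quadratic data. The fitted line leaves residuals that follow a pattern (positive at both ends, negative in the middle) instead of scattering randomly, and a prediction outside the observed range misses badly.

```python
import numpy as np

# Hypothetical curved data: y depends on x quadratically, with no noise
x = np.linspace(-3, 3, 61)
y = x ** 2

# Force a straight line through it
slope, intercept = np.polyfit(x, y, deg=1)
residuals = y - (slope * x + intercept)

# The line cannot bend, so the errors are systematic rather than random:
# positive at both ends (x = -3, x = 3), negative in the middle (x = 0)
print(residuals[0], residuals[30], residuals[-1])

# Extrapolating to an unseen point outside the observed range
x_new = 5.0
print("line predicts", slope * x_new + intercept, "true value is", x_new ** 2)
```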

No Multicollinearity:

Multicollinearity is a moderate to high correlation among the independent variables.

How to check for multicollinearity?

I’ve covered multicollinearity in detail in this blog post. Do check it out.
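For a runnable check here, one common option (not necessarily the one covered in that post) is the variance inflation factor (VIF): regress each independent variable on all the others and compute 1 / (1 - R²). The sketch below implements that from scratch with NumPy on hypothetical data; the helper name is an assumption. A frequently quoted rule of thumb treats values above roughly 5 or 10 as a sign of problematic multicollinearity. If statsmodels is installed, `statsmodels.stats.outliers_influence.variance_inflation_factor` offers a similar calculation.

```python
import numpy as np

def variance_inflation_factors(X):
    """VIF for each column of X: 1 / (1 - R^2), where R^2 comes from
    regressing that column on all the other columns plus an intercept."""
    X = np.asarray(X, dtype=float)
    n, k = X.shape
    vifs = []
    for j in range(k):
        target = X[:, j]
        others = np.delete(X, j, axis=1)
        # Design matrix for the auxiliary regression, with an intercept column
        A = np.column_stack([np.ones(n), others])
        coef, *_ = np.linalg.lstsq(A, target, rcond=None)
        fitted = A @ coef
        ss_res = np.sum((target - fitted) ** 2)
        ss_tot = np.sum((target - target.mean()) ** 2)
        r_squared = 1 - ss_res / ss_tot
        vifs.append(1 / (1 - r_squared))
    return np.array(vifs)

rng = np.random.default_rng(2)
x1 = rng.normal(size=500)
x2 = x1 + rng.normal(scale=0.1, size=500)  # hypothetical: nearly a copy of x1
x3 = rng.normal(size=500)                  # unrelated to x1 and x2

vifs = variance_inflation_factors(np.column_stack([x1, x2, x3]))
print(vifs.round(1))
```

Here x1 and x2 get very large VIFs because each is almost perfectly explained by the other, while x3 stays close to 1.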

What happens if we ignore multicollinearity?

If multicollinearity is present, it will be difficult to figure out which variable is contributing to the output. The estimated parameters (coefficients) will have very high variability; in other words, they become less reliable.
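The instability shows up in a small simulation, assuming NumPy. The helper `coefficient_spread` is hypothetical: it repeatedly draws fresh data from y = x1 + x2 + noise, refits the model, and measures how much the estimated coefficient on x1 varies. The true coefficient is 1 in both scenarios, but when x2 is nearly a copy of x1 the estimates scatter far more widely.

```python
import numpy as np

def coefficient_spread(correlated, trials=500, n=100, seed=3):
    """Refit y = 1*x1 + 1*x2 + noise on many fresh samples and return the
    standard deviation of the estimated coefficient on x1."""
    rng = np.random.default_rng(seed)
    estimates = []
    for _ in range(trials):
        x1 = rng.normal(size=n)
        if correlated:
            x2 = x1 + rng.normal(scale=0.1, size=n)  # nearly collinear with x1
        else:
            x2 = rng.normal(size=n)                  # independent of x1
        y = x1 + x2 + rng.normal(size=n)
        A = np.column_stack([np.ones(n), x1, x2])
        coef, *_ = np.linalg.lstsq(A, y, rcond=None)
        estimates.append(coef[1])  # coefficient on x1
    return np.std(estimates)

spread_collinear = coefficient_spread(correlated=True)
spread_independent = coefficient_spread(correlated=False)
print(round(spread_collinear, 3), round(spread_independent, 3))
```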

Normality of residuals:

Residuals are the errors: they represent the variation in Y that is not explained by the predictors.

How do we deal with these errors?

Probability comes in here.

Since our model, we hope, captures everything systematic in the data, the remaining random errors are due to a large number of minor factors that we cannot trace.

Ring any bells?

If a random variable is affected by many independent causes, and the effect of each cause is small compared with the overall effect, then the variable will closely follow a normal distribution.

Vipanchi
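This is the idea behind the central limit theorem. A minimal simulation, assuming NumPy and Python's standard `statistics.NormalDist`, builds each error as the sum of 50 small independent effects that are individually uniform (not normal). It then checks how often the standardized sums land within one and two standard deviations, compared with the normal distribution's roughly 68% and 95%. The setup is hypothetical.

```python
import numpy as np
from statistics import NormalDist

rng = np.random.default_rng(4)

# Hypothetical setup: each error is the sum of 50 small, independent effects,
# each uniformly distributed on [-0.1, 0.1] (individually far from normal)
effects = rng.uniform(-0.1, 0.1, size=(50_000, 50))
errors = effects.sum(axis=1)

# Standardize, then compare coverage with what a normal distribution gives
z = (errors - errors.mean()) / errors.std()
within_1 = np.mean(np.abs(z) < 1)
within_2 = np.mean(np.abs(z) < 2)
expected_1 = NormalDist().cdf(1) - NormalDist().cdf(-1)  # about 0.683
expected_2 = NormalDist().cdf(2) - NormalDist().cdf(-2)  # about 0.954
print(round(within_1, 3), round(expected_1, 3))
print(round(within_2, 3), round(expected_2, 3))
```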

If the model is really good, that is, if it can predict y accurately, then the error will be zero. Right?

Not exactly. If the model is really good, we can say that the mean of the errors will be zero and the rest of the errors will be concentrated around it.
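A quick check of this, assuming NumPy and hypothetical data: individual residuals from a fitted line are clearly not zero, while their mean is zero up to rounding. Note that when the model includes an intercept, ordinary least squares forces the residuals to average exactly zero on the data it was fit to, so the more informative part is that the errors are concentrated around that mean without a pattern.

```python
import numpy as np

rng = np.random.default_rng(5)

# Hypothetical data: y = 4 - 0.5x plus random error
x = rng.uniform(0, 20, size=300)
y = 4 - 0.5 * x + rng.normal(scale=2.0, size=x.size)

slope, intercept = np.polyfit(x, y, deg=1)
residuals = y - (slope * x + intercept)

# Individual residuals are not zero...
print(np.abs(residuals).max())
# ...but with an intercept in the model, least squares makes their mean zero
print(residuals.mean())
```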

Question to ponder on:

Isn't this the case with every prediction model? Why is this assumption specifically given for Linear Regression?

In the regression model, this assumption is made so that we can carry out statistical hypothesis tests using the F and t distributions.

Aczel and Sounderpandian
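To show where the t distribution enters, here is a sketch, assuming NumPy, of the t statistic for testing whether the slope is zero in simple linear regression: the estimated slope divided by its standard error, with the error variance estimated on n - 2 degrees of freedom. The function name and data are hypothetical. Under normally distributed errors and a true slope of zero, this statistic follows a t distribution with n - 2 degrees of freedom; if SciPy is installed, a two-sided p-value could be obtained with `2 * scipy.stats.t.sf(abs(t_stat), df)`. In simple linear regression, the F statistic for the overall model equals the square of this t statistic.

```python
import numpy as np

def slope_t_statistic(x, y):
    """t statistic for H0: slope = 0 in simple linear regression (hypothetical helper)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = x.size
    sxx = np.sum((x - x.mean()) ** 2)
    slope = np.sum((x - x.mean()) * (y - y.mean())) / sxx
    intercept = y.mean() - slope * x.mean()
    residuals = y - (intercept + slope * x)
    # The error variance estimate uses n - 2 degrees of freedom
    # (two parameters, slope and intercept, were estimated)
    s2 = np.sum(residuals ** 2) / (n - 2)
    se_slope = np.sqrt(s2 / sxx)
    return slope / se_slope, n - 2

rng = np.random.default_rng(6)
x = rng.uniform(0, 10, size=40)
y = 1 + 0.8 * x + rng.normal(size=x.size)  # hypothetical data with a real slope
t_stat, df = slope_t_statistic(x, y)
print(round(t_stat, 2), df)
```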

How do we check for normality of errors?

Q-Q plot

Shapiro-Wilk test for small samples

(More about this will be added later.)
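A Q-Q plot compares sorted residuals with the quantiles a normal distribution would produce; if the errors are normal, the points fall close to a straight line. The sketch below, assuming NumPy and Python's `statistics.NormalDist`, computes those points and summarizes their straightness with a correlation instead of drawing a plot. The plotting positions (i - 0.5) / n are one common convention among several, and the residuals are simulated, hypothetical data. For the actual plot and the Shapiro-Wilk test, SciPy provides `scipy.stats.probplot` and `scipy.stats.shapiro`.

```python
import numpy as np
from statistics import NormalDist

def qq_points(residuals):
    """Pair sorted residuals with matching standard-normal quantiles,
    i.e. the points you would draw on a normal Q-Q plot."""
    sample = np.sort(np.asarray(residuals, dtype=float))
    n = sample.size
    probs = (np.arange(1, n + 1) - 0.5) / n  # plotting positions
    theoretical = np.array([NormalDist().inv_cdf(p) for p in probs])
    return theoretical, sample

rng = np.random.default_rng(7)
normal_resid = rng.normal(size=200)
skewed_resid = rng.exponential(size=200) - 1.0  # hypothetical non-normal errors

results = {}
for name, resid in [("normal", normal_resid), ("skewed", skewed_resid)]:
    theoretical, sample = qq_points(resid)
    # Points on a straight line give a correlation close to 1
    results[name] = np.corrcoef(theoretical, sample)[0, 1]
    print(name, round(results[name], 3))
```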

No Autocorrelation between the residuals:

Autocorrelation means that the data is correlated with itself, that is, with its own earlier (lagged) values.

(Auto means self in Greek.)

Positive autocorrelation in the errors, the most common case, causes the usual standard errors to underestimate the true error in our model. This gives us confidence intervals that are too narrow: an interval reported at 95% confidence would not really cover the true value 95% of the time.

How do we check for autocorrelation?

Durbin-Watson statistic

(More will come about this after further study.)
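The Durbin-Watson statistic is the sum of squared differences between successive residuals divided by the sum of squared residuals. It lies between 0 and 4: values near 2 suggest no first-order autocorrelation, values toward 0 suggest positive autocorrelation, and values toward 4 suggest negative autocorrelation. Below is a minimal NumPy sketch on hypothetical residuals: one independent series and one AR(1) series in which each error carries over 90% of the previous one. statsmodels also ships a `durbin_watson` function in `statsmodels.stats.stattools`.

```python
import numpy as np

def durbin_watson(residuals):
    """Sum of squared successive differences divided by the sum of
    squared residuals. Ranges from 0 to 4."""
    e = np.asarray(residuals, dtype=float)
    return np.sum(np.diff(e) ** 2) / np.sum(e ** 2)

rng = np.random.default_rng(8)
n = 500
independent = rng.normal(size=n)

# Hypothetical positively autocorrelated errors: an AR(1) process where
# each error is 0.9 times the previous one plus a fresh random shock
autocorrelated = np.zeros(n)
shocks = rng.normal(size=n)
for t in range(1, n):
    autocorrelated[t] = 0.9 * autocorrelated[t - 1] + shocks[t]

dw_independent = durbin_watson(independent)
dw_autocorrelated = durbin_watson(autocorrelated)
print(round(dw_independent, 2), round(dw_autocorrelated, 2))
```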

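Understanding the model:

The model has the y = mx + c form of a straight line.

The model is represented like this:

Y = β0 + β1X + ε

where β0 is the intercept (c), β1 is the slope (m), and ε is the random error term.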

The non-random component of the model, the straight line itself, gives us the expected value of Y, that is, the average Y for a given X.

This is represented as

E(Y | X)

If the model is correct, the average value of Y for a given value of X falls right on the regression line.
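A small simulation of this idea, assuming NumPy and a hypothetical true line with intercept 1 and slope 2 (playing the roles of c and m) plus normal errors with standard deviation 1. Individual Y values drawn at X = 3 scatter around the line, but their average lands very close to the line's value, 7.

```python
import numpy as np

rng = np.random.default_rng(9)

# Hypothetical true model: Y = 1 + 2X + error, with error ~ Normal(0, 1)
beta0, beta1 = 1.0, 2.0
x_value = 3.0

# Draw many Y values for the same X; each one scatters around the line
y_draws = beta0 + beta1 * x_value + rng.normal(scale=1.0, size=10_000)

print(y_draws[:3])                               # individual values vary
print(y_draws.mean(), beta0 + beta1 * x_value)  # the average is close to E(Y | X = 3)
```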

That was the introduction to linear regression!

Happy Learning!!

🙂